When and When not to Use AI for CEO Communication

Each use is not the same and some uses come with greater risk

AI isn’t ideal or recommended for every communication purpose and exchange.

It will likely become more tempting, and it will be used even when risk is involved.

Harvard Business Review addressed this important concern in an article in its May-June issue: Why CEOs Should Think Twice Before Using AI to Write Messages.

Interesting and useful findings from a study included:

“When people thought an answer was created by AI, they rated it as less helpful even if it came from a human.

“Conversely, when they thought a CEO had given an answer, they found it more helpful even if it was from AI.”

Knowing this up front may help you make adjustments if you use AI, increasing the probability that your communication is judged credible and beneficial.

A woman I used to know liked to say, “people are funny.” After I heard her say it a few times, including once about me, I had a hunch and asked her about it.

“When you say funny, do you mean that people are weird?” I inquired.

“Yes, that’s what I’m saying,” she replied.

The same applies to people and their convictions about AI. If you don’t like the interpretation of weird, you can think “different” or “funny.”

Our minds believe human answers, in more situations than not, are more honest and better than AI-generated ones. Whether that conclusion is factual or merely perceived, what people naturally assume is often what matters more.

“In simple terms,” HBR wrote, “people place more trust in and find more value in statements they believe come from a human rather than technology. This suggests that while AI can produce helpful information, people’s perceptions play a big role in how it is received.”

The new reality is that most leaders will, according to the publication, “use gen AI in some form or fashion.”

Research backs that up: “Fifty percent of U.S. CEOs say their companies have already automated content creation with it, according to a 2024 Deloitte survey,” and that “Seventy-five percent say they have personally used or are using the technology.”

It makes sense for efficiency’s sake. It also means that poor judgment will be exercised.

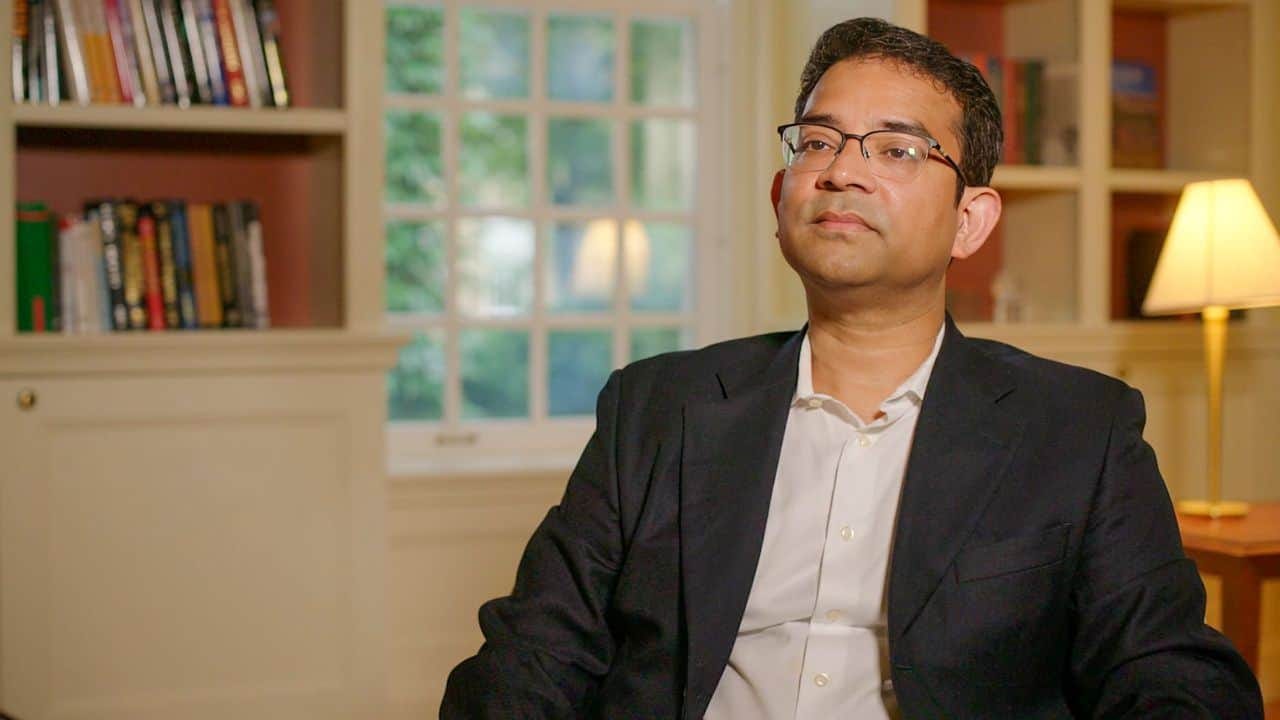

Risks will be taken, sometimes recklessly, so Prithwiraj Choudhury, a Harvard Business School professor, offered three guidelines for CEOs who use AI in their messaging.

1. Be transparent

“If your employees discover that you’ve outsourced your communications without telling them, they may start to believe every message you send is drafted by a bot,” HBR wrote.

“Transparency is essential to building trust and allaying people’s aversion to gen AI.”

People still want to know who is really talking to them, especially if you’re putting your name on that communication or if it’s about a sensitive matter.

2. Use AI for impersonal messaging

“The technology is more effective for formal communications, such as shareholder letters or strategy memos, according to Choudhury’s research,” per HBR. “Avoid it for personal communications, especially with people who know you well.”

This is a small, yet important, reminder. People expect less of a personal touch when AI is used for highly formal communications.

Personal communications are different because people may feel you are not treating them as human beings worthy of your time and a genuine, interpersonal interaction.

3. Triple-check your work

“Rather than simply copying and pasting AI’s responses into a document, review and fact-check every word, especially for important and sensitive messages,” HBR recommends.

“You should also ask an editor to review your technology-generated communications (or any communications, really) to ensure their meaning, tone and personality align with what you intended.”

Choudhury explains what is top of mind at this point.

“Gen AI is a great tool that will save CEOs a lot of time but I don’t think you can let it run completely on its own,” he stated. “Even if it answers only one question incorrectly, you will suffer huge unintended consequences.”

As someone who has missed needed edits at times, I know it can be a big embarrassment, whether in media or business communications. It doesn’t reflect positively on us, and for a CEO, the expectations are much higher and the stakeholders more critical.

Using AI in all or even some communications may not be viewed as ethical. It might be harshly judged and possibly prove highly upsetting. It’s not worth the risk to credibility, trust and relationships. Judicious use is smarter and safer, and it is good risk management.

If you use AI, labeling it as such is a form of respectful transparency that may prevent backlash. Consider, too, that uncaught errors will look bad and could bring unpredictable costs your way. Fact check. Precisely.

AI is a tool, one that can be respected if applied correctly and used responsibly, or one that can cause serious, self-inflicted problems.
